Economy of China

The socialist market economy[15] of the People's Republic of China (PRC) is the world's second-largest economy by nominal GDP and by purchasing power parity, after the United States.[1] It is the world's fastest-growing major economy, with growth rates averaging 10% over the past 30 years.[16] China is also the largest exporter and second-largest importer of goods in the world.
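The 10% average growth figure is easier to appreciate as a compounding calculation. The sketch below assumes, purely for illustration, a constant 10% compound annual rate. Actual yearly growth varied, and an arithmetic average of yearly rates is not the same as a compound rate, so treat the result as an order-of-magnitude check only.

```python
import math

# Minimal sketch (assumption): treat "growth averaging 10% over 30 years" as a
# constant 10% compound annual rate. Real yearly growth varied, so the result
# only illustrates the order of magnitude implied by the claim.


def compound_multiple(annual_rate: float, years: int) -> float:
    """Factor by which output grows at a constant compound annual rate."""
    return (1.0 + annual_rate) ** years


def doubling_time(annual_rate: float) -> float:
    """Exact number of years needed to double at a constant annual rate."""
    return math.log(2.0) / math.log(1.0 + annual_rate)


print(f"30 years at 10%: x{compound_multiple(0.10, 30):.1f}")
print(f"Doubling time at 10%: {doubling_time(0.10):.1f} years")
```

Under that assumption, output grows roughly 17-fold over 30 years and doubles about every 7.3 years.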

On a per capita income basis, China ranked 87th by nominal GDP and 92nd by GDP (PPP) in 2012, according to the International Monetary Fund (IMF). The provinces in the coastal regions of China[17] tend to be more industrialized, while regions in the hinterland are less developed. As China's economic importance has grown, so has attention to the structure and health of the economy.[18][19] Xi Jinping’s Chinese Dream is described as achieving the “Two 100s”: the material goal of China becoming a “moderately well-off society” by about 2020, the 100th anniversary of the Chinese Communist Party, and the modernization goal of China becoming a fully developed nation by 2049, the 100th anniversary of the founding of the People’s Republic.[20]

As the Chinese economy has internationalized, it has also adopted standardized economic forecasting, such as the Purchasing Managers' Index, which was officially launched in China in 2005.[21] Most of China's economic growth is generated in the Special Economic Zones of the People's Republic of China.

Maoist era

By 1949, continuous foreign invasions, frequent revolutions and restorations, and civil wars had left the country with a fragile economy and little infrastructure. As Communist ascendancy seemed inevitable, almost all of China's hard and foreign currency was transported to Taiwan in 1948, making wartime inflation even worse.

After the formation of the PRC, an enormous effort was made toward economic growth, and entire new industries were built. Tight control of the budget and money supply reduced inflation by the end of 1950, though largely at the expense of suppressing private businesses, from small to large, through the Three-anti/Five-anti campaigns of 1951 to 1952. The campaigns were notoriously anti-capitalist and imposed charges that allowed the government to punish capitalists with severe fines.[22] At the beginning of the Communist Party's rule, party leaders had agreed that for a nation such as China, which had no heavy industry and minimal secondary production, capitalism was to be used to help build the "New China" and would eventually be merged into communism.[23]

The new government nationalized the country's banking system and brought all currency and credit under centralized control. It regulated prices by establishing trade associations and boosted government revenues by collecting agricultural taxes. By the mid-1950s, the communists had rebuilt the country's railroad and highway systems, restored agricultural and industrial production to their prewar levels, and brought the bulk of China's industry and commerce under the direct control of the state.

Meanwhile, in fulfillment of their revolutionary promise, China's communist leaders completed land reform within two years of coming to power, eliminating landlords and redistributing their land and other possessions to peasant households.

In 1958 Mao tried to push China's economy to new heights. Under his highly touted "Great Leap Forward", agricultural collectives were reorganized into enormous communes where men and women were assigned in military fashion to specific tasks. Peasants were told to stop relying on the family and instead to adopt a system of communal kitchens, mess halls, and nurseries. Wages were calculated along the communist principle of "From each according to his ability, to each according to his need", and sideline production was banned as incipient capitalism. All Chinese citizens were urged to boost the country's steel production by establishing "backyard steel furnaces" to help overtake the West. The Great Leap Forward quickly revealed itself as a giant step backwards. Over-ambitious targets were set, falsified production figures were duly reported, and Chinese officials lived in an unreal world of miraculous production increases. By 1960, agricultural production in the countryside had fallen dangerously and large areas of China were gripped by a devastating famine.

For the next several years, China experienced a period of relative stability. Agricultural and industrial production returned to normal levels, and labor productivity began to rise. Then, in 1966, Mao proclaimed a Cultural Revolution to "put China back on track". Under orders to "Destroy the Four Olds" (old thoughts, culture, customs and habits), universities and schools closed their doors, and students, who became Mao's "Red Guards", were sent throughout the country to make revolution, beating and torturing anyone whose rank or political thinking offended. By 1969 the country had descended into anarchy, and factions of the Red Guards had begun to fight among themselves.

Since 1949 the government, under a socialist political and economic system, has been responsible for planning and managing the national economy.[55] In the early 1950s, the foreign trade system was monopolized by the state. Nearly all domestic enterprises were state-owned, and the government set the prices of key commodities, controlled the level and general distribution of investment funds, determined output targets for major enterprises and branches, allocated energy resources, set wage levels and employment targets, operated the wholesale and retail networks, and steered financial policy and the banking system. In the countryside from the mid-1950s, the government established cropping patterns, set the level of prices, and fixed output targets for all major crops.

Since economic reforms were instituted in 1978, the government's role in the economy has lessened considerably. Industrial output by state enterprises has slowly declined, although a few strategic industries, such as the aerospace industry, remain predominantly state-owned today. While the government's role in managing the economy has been reduced and the roles of private enterprise and market forces have increased, the government maintains a major role in the urban economy. Through its policies on issues such as agricultural procurement, the government also retains a major influence on rural sector performance. The State Constitution of 1982 specified that the state is to guide the country's economic development by making broad decisions on economic priorities and policies, and that the State Council, which exercises executive control, is to direct its subordinate bodies in preparing and implementing the national economic plan and the state budget. A major portion of the government bureaucracy is devoted to managing the economy through a top-down chain of command: all but a few of the more than 100 ministries, commissions, administrations, bureaus, academies, and corporations under the State Council are concerned with economic matters.

Each significant economic sector is supervised by one or more of these organizations, which include the People's Bank of China, National Development and Reform Commission, Ministry of Finance, and the ministries of agriculture; coal industry; commerce; communications; education; light industry; metallurgical industry; petroleum industry; railways; textile industry; and water resources and electric power. Several aspects of the economy are administered by specialized departments under the State Council, including the National Bureau of Statistics, Civil Aviation Administration of China, and the tourism bureau. Each of the economic organizations under the State Council directs the units under its jurisdiction through subordinate offices at the provincial and local levels.

The whole policy-making process involves extensive consultation and negotiation.[56] Economic policies and decisions adopted by the National People's Congress and the State Council are passed on to the economic organizations under the State Council, which incorporate them into the plans for the various sectors of the economy. Economic plans and policies are implemented by a variety of direct and indirect control mechanisms. Direct control is exercised by designating specific physical output quotas and supply allocations for some goods and services. Indirect instruments, also called "economic levers", operate by affecting market incentives. These include levying taxes, setting prices for products and supplies, allocating investment funds, monitoring and controlling financial transactions by the banking system, and controlling the allocation of key resources, such as skilled labor, electric power, transportation, steel, and chemicals (including fertilizers). The main advantage of including a project in an annual plan is that the raw materials, labor, financial resources, and markets are guaranteed by directives that have the weight of the law behind them. In reality, however, a great deal of economic activity goes on outside the scope of the detailed plan, and the plan has tended to become narrower rather than broader in scope. A major objective of the reform program was to reduce the use of direct controls and to increase the role of indirect economic levers. Major state-owned enterprises still receive detailed plans from their ministries specifying physical quantities of key inputs and products. These corporations, however, have been increasingly affected by prices and allocations that are determined through market interaction and only indirectly influenced by the central plan.

Economic activity in China is divided among directive planning (mandatory), indicative planning (indirect implementation of central directives), and activity left to market forces. In the early 1980s, during the initial reforms, enterprises began to gain increasing discretion over the quantities of inputs purchased, the sources of inputs, the variety of products manufactured, and the production process. Operational supervision over economic projects has devolved primarily to provincial, municipal, and county governments. The majority of state-owned industrial enterprises, which were managed at the provincial level or below, were partially regulated by a combination of specific allocations and indirect controls, but they also produced goods outside the plan for sale in the market. Important, scarce resources (for example, engineers or finished steel) may have been assigned to this kind of unit in exact numbers. Less critical assignments of personnel and materials would have been authorized in a general way by the plan, with procurement arrangements left to enterprise management.

In addition, enterprises themselves are gaining increased independence in a range of activities. While strategically important industries and services and most large-scale construction have remained under directive planning, the market economy has grown rapidly in scale every year as it subsumes more sectors.[57] Overall, the Chinese industrial system contains a complex mixture of relationships. The State Council generally exercises relatively strict control over resources deemed to be of vital concern for the performance and health of the entire economy. Less vital aspects of the economy have been transferred to lower levels for detailed decisions and management. Furthermore, the need to coordinate entities in different organizational hierarchies generally leads to a great deal of informal bargaining and consensus building.[57]

Consumer spending has been subject to a limited degree of direct government influence but is primarily determined by the basic market forces of income levels and commodity prices. Before the reform period, key goods were rationed when they were in short supply, but by the mid-1980s availability had increased to the point that rationing was discontinued for everything except grain, which could also be purchased in the free markets. Collectively owned units and the agricultural sector were regulated primarily by indirect instruments. Each collective unit was "responsible for its own profit and loss", and the prices of its inputs and products provided the major production incentives.

Vast changes were made from the late 1970s in relaxing state control of the agricultural sector. The structural mechanisms for implementing state objectives, namely the people's communes and their subordinate teams and brigades, have been either entirely eliminated or greatly diminished.[58] Farm incentives have been boosted both by price increases for state-purchased agricultural products and by permission to sell excess production on the free market. Farmers have more room to choose which crops to grow, and peasants are allowed to contract for land that they will work, rather than simply working most of the land collectively. The system of procurement quotas (fixed in the form of contracts) is being phased out, although the state can still buy farm products and control surpluses in order to affect market conditions.[59]

Foreign trade is supervised by the Ministry of Commerce, customs, and the Bank of China, the foreign exchange arm of the Chinese banking system, which controls access to the foreign currency required for imports. Ever since restrictions on foreign trade were reduced, there have been broad opportunities for individual enterprises to engage in exchanges with foreign firms without much intervention from official agencies.

Foreign investment

See also: Qualified Domestic Institutional Investor, Qualified Foreign Institutional Investor, China Investment Promotion Agency, China Council for the Promotion of International Trade, and Ministry of Commerce of the People's Republic of China

China's investment climate has changed dramatically with more than two decades of reform. In the early 1980s, China restricted foreign investments to export-oriented operations and required foreign investors to form joint-venture partnerships with Chinese firms. The Encouraged Industry Catalogue sets out the degree of foreign involvement allowed in various industry sectors. From the beginning of the reforms legalizing foreign investment, capital inflows expanded every year until 1999.[152] Foreign-invested enterprises account for 58–60% of China's imports and exports.[153]

Since the early 1990s, the government has allowed foreign investors to manufacture and sell a wide range of goods on the domestic market, eliminated time restrictions on the establishment of joint ventures, provided some assurances against nationalization, allowed foreign partners to become chairs of joint venture boards, and authorized the establishment of wholly foreign-owned enterprises, now the preferred form of FDI. In 1991, China granted more preferential tax treatment to Wholly Foreign-Owned Enterprises, contractual ventures, and foreign companies that invested in selected economic zones or in projects encouraged by the state, such as energy, communications and transportation.

China also authorized some foreign banks to open branches in Shanghai and allowed foreign investors to purchase special "B" shares of stock in selected companies listed on the Shanghai and Shenzhen Securities Exchanges. These "B" shares sold to foreigners carried no ownership rights in a company. In 1997, China approved 21,046 foreign investment projects and received over $45 billion in foreign direct investment. In 2000 and 2001, China significantly revised its laws on Wholly Foreign-Owned Enterprises and China Foreign Equity Joint Ventures, easing export performance and domestic content requirements.

Foreign investment remains a strong element in China's rapid expansion in world trade and has been an important factor in the growth of urban jobs. In 1998, foreign-invested enterprises produced about 40% of China's exports, and foreign exchange reserves totalled about $145 billion. Foreign-invested enterprises today produce about half of China's exports (the majority of China's foreign investment comes from Hong Kong, Macau and Taiwan), and China continues to attract large investment inflows. However, the Chinese government's emphasis on guiding FDI into manufacturing has led to market saturation in some industries, while leaving China's services sectors underdeveloped. From 1993 to 2001, China was the world's second-largest recipient of foreign direct investment after the United States. China received $39 billion in FDI in 1999 and $41 billion in 2000. China is now one of the leading FDI recipients in the world, receiving almost $80 billion in 2005 according to World Bank statistics. In 2006, China received $69.47 billion in foreign direct investment.[154]

Foreign exchange reserves totaled $155 billion in 1999 and $165 billion in 2000. They exceeded $800 billion in 2005, more than doubling from 2003, and stood at $819 billion at the end of 2005, $1.066 trillion at the end of 2006, and $1.9 trillion by June 2008. In addition, by the end of September 2008 China had overtaken Japan for the first time as the largest foreign holder of US Treasury securities, with a total of $585 billion versus Japan's $573 billion. China's foreign exchange reserves have also surpassed Japan's, making them the largest in the world.

As part of its WTO accession, China undertook to eliminate certain trade-related investment measures and to open up specified sectors that had previously been closed to foreign investment. New laws, regulations, and administrative measures to implement these commitments are being issued. Major remaining barriers to foreign investment include opaque and inconsistently enforced laws and regulations and the lack of a rules-based legal infrastructure. Warner Bros., for instance, withdrew its cinema business in China as a result of a regulation that requires Chinese investors to own at least a 51 percent stake or play a leading role in a foreign joint venture.[155]

The foreign exchange market

The foreign exchange market (forex, FX, or currency market) is a global decentralized market for the trading of currencies. The main participants in this market are the larger international banks. Financial centers around the world function as anchors of trading between a wide range of different types of buyers and sellers around the clock, with the exception of weekends. EBS and Reuters Dealing 3000 are the two main interbank FX trading platforms. The foreign exchange market determines the relative values of different currencies.[1]

The foreign exchange market works through financial institutions and operates on several levels. Behind the scenes, banks turn to a smaller number of financial firms known as “dealers,” who are actively involved in large quantities of foreign exchange trading. Most foreign exchange dealers are banks, so this behind-the-scenes market is sometimes called the “interbank market,” although a few insurance companies and other kinds of financial firms are involved. Trades between foreign exchange dealers can be very large, involving hundreds of millions of dollars.[citation needed] Because every trade involves two sovereign currencies, the forex market has little (if any) supervision by a single regulatory body.

The foreign exchange market assists international trade and investment by enabling currency conversion. For example, it permits a business in the United States to import goods from the European Union member states, especially Eurozone members, and pay in euros, even though its income is in United States dollars. It also supports direct speculation on the value of currencies, as well as the carry trade: speculation based on the interest rate differential between two currencies.[2]
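The carry trade can be illustrated with a one-period calculation: borrow in a low-interest currency, convert, invest at a higher rate, then convert back and repay. This is a minimal sketch under simplifying assumptions (no transaction costs, a single period, simple interest). The interest rates, exchange rates and the `carry_trade_profit` helper are all hypothetical, not market data or a standard API.

```python
# Minimal one-period carry-trade sketch (illustrative assumptions only):
# borrow in a low-interest "funding" currency, convert at spot, invest in a
# higher-interest "target" currency, then convert back and repay the loan.
# All rates and exchange rates used below are hypothetical.


def carry_trade_profit(
    principal: float,   # amount borrowed, in funding currency
    r_funding: float,   # interest rate paid on the loan for the period
    r_target: float,    # interest rate earned in the target currency
    spot_start: float,  # funding-currency units per 1 target unit at start
    spot_end: float,    # same quote at the end of the period
) -> float:
    """Profit (in funding currency) after repaying principal plus interest."""
    target_units = principal / spot_start        # convert borrowed funds
    grown = target_units * (1.0 + r_target)      # earn the higher rate
    proceeds = grown * spot_end                  # convert back at the new rate
    repayment = principal * (1.0 + r_funding)    # repay loan with interest
    return proceeds - repayment


# Exchange rate unchanged: profit is roughly the interest differential.
print(round(carry_trade_profit(1_000_000, 0.005, 0.045, 1.0, 1.0), 2))
# Target currency falls 5%: the currency loss outweighs the rate differential.
print(round(carry_trade_profit(1_000_000, 0.005, 0.045, 1.0, 0.95), 2))
```

With these hypothetical inputs, the first trade earns about 40,000 units of the funding currency, while a 5% fall in the target currency turns it into a loss of about 12,250. That gap is the exchange-rate risk a carry trader speculates against.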

In a typical foreign exchange transaction, a party purchases some quantity of one currency by paying some quantity of another currency. The modern foreign exchange market began forming during the 1970s, when countries gradually switched to floating exchange rates from the fixed exchange rates of the Bretton Woods system, ending three decades of government restrictions on foreign exchange transactions. (The Bretton Woods system of monetary management established the rules for commercial and financial relations among the world's major industrial states after World War II.)

The foreign exchange market is unique because of the following characteristics:

· its huge trading volume, representing the largest asset class in the world and leading to high liquidity;

· its geographical dispersion;

· its continuous operation: 24 hours a day except weekends, i.e., trading from 20:15 GMT on Sunday until 22:00 GMT on Friday;

· the variety of factors that affect exchange rates;

· the low margins of relative profit compared with other fixed-income markets; and

· the use of leverage to magnify profits and losses relative to account size.
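Leverage is what turns small relative profit margins into large gains or losses on a trader's account. The sketch below is minimal and rests on simplifying assumptions: a linear position, and no spreads, financing (rollover) costs or margin calls. The 50:1 ratio, the account size and the `leveraged_pnl` helper are hypothetical.

```python
# Hypothetical leverage sketch: the position is `leverage` times the account
# equity, so a given percentage move in the exchange rate changes equity by
# `leverage` times that percentage. Spreads, financing (rollover) costs and
# margin calls are ignored as simplifying assumptions.


def leveraged_pnl(account_equity: float, leverage: float,
                  rate_change_pct: float) -> tuple[float, float]:
    """Return (profit or loss, return on equity in percent)."""
    position_size = account_equity * leverage          # notional exposure
    pnl = position_size * rate_change_pct / 100.0      # linear P&L on the move
    return_on_equity_pct = pnl / account_equity * 100.0
    return pnl, return_on_equity_pct


# Hypothetical 10,000-unit account at 50:1 leverage.
print(leveraged_pnl(10_000, 50, 1.0))    # (5000.0, 50.0)
print(leveraged_pnl(10_000, 50, -2.0))   # (-10000.0, -100.0)
```

At 50:1, a 1% favourable move returns 50% on the account, and a 2% adverse move wipes it out entirely.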

As such, it has been described as the market closest to the ideal of perfect competition, notwithstanding currency intervention by central banks. According to the Bank for International Settlements,[3] as of April 2010, average daily turnover in global foreign exchange markets was estimated at $3.98 trillion, a growth of approximately 20% over the $3.21 trillion daily volume as of April 2007. Some firms specializing in the foreign exchange market had put the average daily turnover in excess of US$4 trillion.[4]

The $3.98 trillion break-down is as follows:

· $1.490 trillion in spot transactions

· $475 billion in outright forwards

· $1.765 trillion in foreign exchange swaps

· $43 billion in currency swaps

· $207 billion in options and other products
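The components listed above can be checked against the headline $3.98 trillion figure. Working in integer billions of US dollars per day keeps the total free of floating-point rounding. The share calculation is only a simple illustration, not BIS methodology.

```python
# Sanity check of the April 2010 turnover breakdown quoted above
# (average daily turnover, billions of US dollars).
turnover_components = {
    "spot transactions": 1490,
    "outright forwards": 475,
    "foreign exchange swaps": 1765,
    "currency swaps": 43,
    "options and other products": 207,
}

total = sum(turnover_components.values())
print(f"total: ${total / 1000:.2f} trillion")  # total: $3.98 trillion

# Share of each instrument in total daily turnover.
for name, value in turnover_components.items():
    print(f"{name:<28} {value / total:6.1%}")
```

The components sum exactly to the quoted total, and foreign exchange swaps make up the largest single share.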

Syria Chemical Attack

The August 2013 Ghouta chemical attack refers to a series of alleged chemical attacks on Wednesday, 21 August 2013, in the Ghouta region of the Rif Dimashq Governorate of Syria. Opposition sources gave a death toll of 322[2] to 1,729.[8] According to the Syrian Observatory for Human Rights (SOHR), which gave the lowest estimate of 322 killed,[2] 46 of the dead were rebel fighters.[10] The attacks have so far not been independently confirmed due to difficulty of movement on the ground,[11] and the Syrian government denied that chemical attacks occurred.[12] If confirmed, it would be the deadliest chemical attack since the Halabja poison gas attack in 1988.[13][14]

The Syrian government prevented United Nations investigators from reaching the sites of the attacks,[15][16] despite their accommodations being only a few kilometers away.[17] On 23 August, American and European security sources made a preliminary assessment that chemical weapons were used by Syrian forces, likely with high-level approval from the government of President Bashar al-Assad.

The attacks reportedly occurred around 03:00 in the morning on 21 August 2013,[12] in the rebel-held, and mostly Sunni Muslim,[19] Ghouta agricultural area, just east of Damascus, which had been under an Army siege, backed by Hezbollah,[20][21] for months. The towns attacked were: Hammuriyah, Irbin, Saqba, Kafr Batna, Mudamiyah,[5] Harasta, Zamalka and Ain Terma.[22] An attack was also reported in the rebel-held Damascus suburb of Jobar.[23] Some of the victims died while sleeping.[19]

On 21 August, the Local Coordination Committees (LCC) claimed that of 1,338 victims, 1,000 were in Zamalka; of these, 600 bodies were transferred to medical points in other towns and 400 remained at a Zamalka medical centre.[7] At least six medics died while treating the victims.[24]

The attack came almost exactly one year after U.S. President Barack Obama's "red line" speech.[25] According to a Jerusalem Post correspondent, the attack occurred after "the US and its allies concluded months ago that, since at least Christmas of last year, Syria’s nominal president Bashar Assad has tested chemical weapons intermittently on his own people."[26]

The day after the alleged chemical attacks, on 22 August, the area of Ghouta was bombarded by the Syrian army.[27]

Timing

BBC News interpreted the darkness and calls to prayer in the videos as consistent with a pre-dawn timing of the attacks. BBC News considered it significant that the "three main Facebook pages of Syrian opposition groups" reported "fierce clashes between FSA rebels and government forces, as well as shelling by government forces" at 01:15 local time (UTC+3) on 21 August 2013 in the eastern Ghouta areas that were later claimed to have been attacked with chemical weapons.[28]

BBC News stated that an hour and a half later, the same three Facebook pages reported the first claims of chemical weapons use, within a few minutes of one another. At 02:45 UTC+3, the Ein Tarma Co-ordination Committee stated that "a number of residents died in suffocation cases due to chemical shelling of the al-Zayniya area [in Ein Tarma]." At 02:47, the Sham News Network reported an "urgent" message that Zamalka had been attacked with chemical weapons shells. At 02:55, the LCC made "a similar report."[28] The Los Angeles Times timed the attacks at "about" 03:00.[12]

Witness statements

Symptoms

Witness statements to The Guardian about symptoms included "people who were sleeping in their homes [who] died in their beds," headaches and nausea, "foam coming out of [victims'] mouths and noses," a "smell something like vinegar and rotten eggs," suffocation, "bodies [that] were turning blue," a "smell like cooking gas" and redness and itching of the eyes.[29] Richard Spencer of The Telegraph summarised witness statements, stating, "The poison ... may have killed hundreds, but it has left twitching, fainting, confused but compelling survivors."[30] Symptoms reported by Ghouta residents and doctors to Human Rights Watch included "suffocation, muscle spasms and frothing at the mouth, which are consistent with nerve agent poisoning."[15]

Syrian human rights lawyer Razan Zaitouneh, present in Eastern Ghouta, stated, "Hours [after the shelling], we started to visit the medical points in Ghouta to where injured were removed, and we couldn't believe our eyes. I haven't seen such death in my whole life. People were lying on the ground in hallways, on roadsides, in hundreds."[31]

Médecins Sans Frontières (MSF) stated that in three hospitals in the area with which it has "a strong and reliable collaboration", about "3600 patients displaying neurotoxic symptoms [were received] in less than three hours on the morning" of 21 August, among which 355 died. Symptoms listed by MSF included "convulsions, excess saliva, pinpoint pupils, blurred vision and respiratory distress". MSF Director of Operations Bart Janssens stated that MSF "can neither scientifically confirm the cause of these symptoms nor establish who is responsible for the attack. However, the reported symptoms of the patients, in addition to the epidemiological pattern of the events—characterised by the massive influx of patients in a short period of time, the origin of the patients, and the contamination of medical and first aid workers—strongly indicate mass exposure to a neurotoxic agent."[32]

Delivery method

Abu Omar of the Free Syrian Army stated to The Guardian that the rockets involved in the attack were unusual because "you could hear the sound of the rocket in the air but you could not hear any sound of explosion" and no obvious damage to buildings occurred.[29] Human Rights Watch's witnesses reported "symptoms and delivery methods consistent with the use of chemical nerve agents."[15]

Activists and local residents contacted by The Guardian said that "the remains of 20 rockets [thought to have been carrying neurotoxic gas were] found in the affected areas. Many [remained] mostly intact, suggesting that they did not detonate on impact and potentially dispersed gas before hitting the ground."[33]

Intelligence reports

On 23 August, US officials stated that American intelligence detected activity at Syrian chemical weapons sites before the attack on 21 August.[34]

Analysis of videos

Experts who have analysed the first video said it shows the strongest evidence yet consistent with the use of a lethal toxic agent. The evidence was so compelling that it convinced experts who had previously raised questions over the authenticity of previous claims or who had highlighted contradictions, one of them being Jean Pascal Zanders, a former analyst from the Stockholm International Peace Research Institute.[35]

Visible symptoms reportedly included rolling eyes, foaming at the mouth, and tremors. There was at least one image of a child suffering miosis, the pin-point pupil effect associated with the nerve agent sarin, a powerful neurotoxin reportedly used before in Syria. Ralph Trapp, a former scientist at the Organisation for the Prohibition of Chemical Weapons, said the footage showed what a chemical weapons attack on a civilian area would look like, and went on to note: "This is one of the first videos I've seen from Syria where the numbers start to make sense. If you have a gas attack you would expect large numbers of people, children and adults, to be affected, particularly if it's in a built-up area."[35]

According to a report by The Telegraph, "videos uploaded to YouTube by activists showed rows of motionless bodies and medics attending to patients apparently in the grip of seizures. In one piece of footage, a young boy appeared to be foaming at the mouth while convulsing."[1]

Hamish de Bretton-Gordon, a former commander of British chemical and biological counterterrorism forces, told the BBC that the images were very similar to previous incidents he had witnessed, although he could not verify the footage.[36]

Analysis, verifiability, doubts and speculations

Physical arguments

CNN noted that some opposition activists claimed "Agent 15", also known as BZ, was used in the attacks. Some experts doubt that the Syrian government possesses that chemical, and the symptoms it causes are very different from those reported in this attack. Independent experts who studied the flood of online videos that appeared on the morning of the attacks were unsure of the cause of the deaths. Gwyn Winfield, editorial director at the magazine CBRNe World, which reports on chemical, biological, radiological, nuclear or explosives use, analyzed the videos and wrote on the magazine's site: "Clearly respiratory distress, some nerve spasms and a half-hearted washdown (involving water and bare hands?), but it could equally be a riot control agent as a (chemical warfare agent)." Some analysts speculated that shelling may have hit a stockpile of chemical agents, whether controlled by the rebels or the government.[23] A professor of microbiology who analysed the videos concluded with a "best guess" that they were indicative of the aftermath of an attack with some incapacitating chemical agent, but probably not sarin gas or a similar weapon, as these would have left signs of visible blistering.[37]

General

On 22 August, the United States said it was unable to say conclusively that chemical weapons had been used in the alleged attack. U.S. President Barack Obama directed U.S. intelligence agencies to help urgently verify the allegations.[38] On 23 August, American and European security sources made a preliminary assessment that chemical weapons were used by Syrian forces, likely with high-level approval from the government of President Bashar al-Assad.[18]

Motives

Some observers also questioned the motive and timing, if government forces were responsible, since the hotel where the team of United Nations chemical weapons inspectors was staying was just a few miles from the attack. A CNN reporter pointed out that government forces did not appear to be in imminent danger of being overrun by rebels in the areas in question, where a stalemate had set in. He questioned why the Army would risk an action that could provoke international intervention. The reporter also questioned whether the Army would use sarin gas just a few kilometers from the center of Damascus on a windy day.[23]

A reporter for The Telegraph also pointed to the questionable timing, given that government forces had recently beaten back rebels in some areas around Damascus and recaptured territory: "Using chemical weapons might make sense when he is losing, but why launch gas attacks when he is winning anyway?" The reporter also asked why the attacks would happen just three days after the inspectors arrived in Syria.[39] Bloomberg News offered an opinion on the question: "why would the Assad regime launch its biggest chemical attack on rebels and civilians precisely at the moment when a UN inspection team was parked in Damascus? The answer to that question is easy: Because Assad believes that no one–not the UN, not President Obama, not other Western powers, not the Arab League–will do a damn thing to stop him."[40]

Israeli news reporter Ron Ben-Yishai stated that the motive for using chemical weapons could be the "army's inability to seize the rebel's stronghold in Damascus' eastern neighbourhoods." He also speculated that this, coupled with FSA plans to expand the area under its control and advance towards the centre of the capital, pressured the government to use chemical weapons against civilians in these neighbourhoods, with the goal of deterring FSA fighters who shelter inside residential homes and operate from within them.[41]

Syrian human rights lawyer Razan Zaitouneh stated that the Assad government "would [not] care about [using] chemical weapons, [since] it knows that the international community would not do anything about it, like it did nothing about all the previous crimes the regime committed against its people. ... why [would] the regime [not] care to use [chemical] weapons or any kind of weapons to stop the progress of the Syrian Free Army from the capital, Damascus?"[31][18]


Massacres in Syria

2011

- Between 31 July and 4 August 2011, during the Siege of Hama, Syrian government forces reportedly killed more than 100 people in an assault on the city of Hama. Opposition activists later raised their estimated civilian death toll to 200.[1]

- Between 19 and 20 December 2011, a massacre occurred in the Jabal al-Zawiya mountains of Idlib Governorate. The killings started after a large group of soldiers tried to defect from Army positions over the border to Turkey. Intense clashes between the military and the defectors, who were supported by other rebel fighters, erupted. After two days of fighting, 235 defectors, 100 pro-government soldiers and 120 civilians were killed.[2]

2012

- On 27 February 2012, during the 2012 Homs offensive, the Syrian Observatory for Human Rights reported that 68 bodies were found between the villages of Ram al-Enz and Ghajariyeh and were taken to the central hospital of Homs. The wounds showed that some of the dead were shot while others were killed by cutting weapons. The Local Coordination Committees reported that 64 dead bodies were found. These two sources hypothesized that the victims were civilians who tried to flee the battle in Homs and were then killed by a pro-government militia.[3][4]

- On 9 March 2012, during the 2012 Homs offensive, 30 tanks of the Syrian Army entered the quarter of Karm al-Zeitoun.[5] After this, it was reported that the Syrian Army had massacred 47 women and children in the district (26 children and 21 women), some of whom had their throats slit, according to activists. The opposition claimed that the main perpetrators behind the killings were the government paramilitary force the Shabiha.[6]

- On 5 April 2012, the military captured Taftanaz's city center after a two-hour battle, after which the army reportedly carried out a massacre by rounding up and executing people. At least 62 people were killed. It was unknown how many were opposition fighters and how many were civilians.[7][8][9]

- On 25 May 2012, the Houla massacre occurred in two opposition-controlled villages in the Houla Region of Syria, a cluster of villages north of Homs. According to the United Nations, 108 people were killed, including 34 women and 49 children. UN investigators have reported that witnesses and survivors stated that the massacre was committed by pro-government Shabiha.[10] The Syrian government alleged that Al-Qaeda terrorist groups were responsible for the killings, and that Houla residents were warned not to speak publicly by opposition forces.[11][12]

- On 29 May 2012, a mass execution was discovered near the eastern city of Deir ez-Zor. The unidentified bodies of 13 men were found, shot execution-style.[13] On 5 June 2012, the rebel Al-Nusra Front claimed responsibility for the killings, stating that it had captured and interrogated the soldiers in Deir ez-Zor and "justly" punished them with death after they confessed to crimes.[14]

- On 31 May 2012, there were reports of a massacre in the Syrian village of al-Buwaida al-Sharqiya. According to sources, 13 factory workers had been rounded up and shot dead by pro-government forces.[15] Syrian government sources blamed rebel forces for the killings.[16]

- On 6 June 2012, the Al-Qubeir massacre occurred in the small village of Al-Qubeir near Hama. According to preliminary evidence, troops had surrounded the village, after which pro-government Shabiha militiamen entered it and killed civilians with "barbarity," UN Secretary-General Ban Ki-moon told the UN Security Council.[17] The death toll, according to opposition activists, was estimated to be between 55 and 68.[18] Activists and witnesses[19] stated that scores of civilians, including children, had been killed by Shabiha militia and security forces, while the Syrian government said that nine people had been killed by "terrorists".[20]

- On 23 June 2012, 25 Shabiha militiamen were killed by Syrian rebels in the city of Daret Azzeh. They were part of a larger group kidnapped by the rebels. The fate of the others kidnapped was unknown.[21] Many of the dead Shabiha militiamen were wearing military uniforms.[22]

- Between 20 and 25 August 2012, the Darayya massacre was reported in the town of Darayya in the Rif Dimashq province. Between 320[23] and 500[24] people were killed in a five-day Army assault on the town, which was rebel-held. At least 18 of the dead were identified as rebels.[25] According to the opposition, Human Rights Watch and some local residents, the killings were committed by the Syrian military and Shabiha militiamen.[26] According to the government and some local residents, they were committed by rebel forces.[27]

- Between 8 and 13 October 2012, during the Battle of Maarrat Al-Nu'man, the Syrian Army was accused by the opposition of executing 65 people,[28] including 50 defecting soldiers.[29]

- On 11 December 2012, the Aqrab massacre, also known as the Aqrab bombings, occurred in the predominantly Alawite village of Aqrab, Hama Governorate. Between 125 and 200 people were reported killed or wounded, of whom only 10 were confirmed dead, when rebel fighters threw bombs at a building in which hundreds of Alawite civilians, along with some pro-government militiamen, were taking refuge from the fighting that had been raging in the town.[30][31] Most of the victims were Alawites.[32]

- On 23 December 2012, the Halfaya massacre occurred in the small town of Halfaya, where between 23[33][34] and 300[35] people were allegedly killed by bombing from warplanes. The civilians in Halfaya were reportedly killed while queuing for bread at a bakery.[35] BBC correspondent Jim Muir noted that the video does not conclusively show that the building was a bakery. He also noted that, despite initial claims by rebels that many women and children were among the dead, all 23 people identified as dead were men. Muir added: "it is not out of the question that regime jets managed to strike a concentration of rebel fighters."[33]

- On 24 December 2012, the Talbiseh bakery massacre took place in the town of Talbiseh. More than 14 people were killed by bombing from warplanes from the Syrian government.[36] The civilians in the city were killed while queuing for bread at a local bakery.[37]

2013

- On 15 January 2013, government troops stormed the village of Basatin al-Hasawiya on the outskirts of Homs city reportedly killing 106 civilians, including women and children, by shooting, stabbing or possibly burning them to death.[38]

- On 15 January 2013, twin explosions of unknown origin killed 87 people at Aleppo's university, many of them students attending exams. The government and opposition blamed each other for the explosions at the university.[38]

- Between 29 January and 14 March 2013, opposition activists claimed that they had recovered 230 bodies from a river in Aleppo. They accused government forces of executing the men because the bodies had come down the river from the direction of government-held areas of the city. However, Human Rights Watch was able to identify only 147 of the victims, all male and aged between 11 and 64.[39]

- On 23 March 2013, an alleged chemical weapons attack was carried out in the town of Khan al-Assal, on the outskirts of Aleppo. Between 25 and 31 people were killed. The government and the rebels traded blame for the attack, although the opposition activist group the Syrian Observatory for Human Rights stated that 16 of the dead were government soldiers. According to the government, 21 of the dead were civilians and 10 were soldiers. Russia supported the government's allegations, while the United States said there was no evidence of any attack at all.[40][41][42][43]

- Between 16 and 21 April 2013, during the Battle of Jdaidet al-Fadl, the Syrian Army was accused by the opposition of carrying out a massacre. SOHR claimed that 250 people had been killed since the start of the battle and documented 127 of the dead by name, including 27 rebels. Another opposition claim put the death toll at 450.[44][45][46] One activist claimed to have counted the bodies of summarily executed people: 98 in the town's streets and 86 in makeshift clinics. Another activist stated that they had documented 85 people who were executed, including 28 killed in a makeshift hospital.[47]

- Between 27 April and 5 July 2013, during the rebel siege of the Aleppo Central Prison, more than 120 prisoners were killed. Most died of malnutrition and lack of medical treatment caused by the siege, or from rebel bombardment of the prison. Some were also executed by government forces.[48][49] On 1 June, during the siege, an opposition activist group claimed that 50 prisoners had been executed by government forces, while another group reported that, up to that point, 40 government soldiers and 31 prisoners had been killed in rebel shelling of the prison complex.[50][51]

- Between 2 and 3 May 2013, the Bayda and Baniyas massacres occurred, in which pro-government militiamen allegedly killed between 128 and 245 people in the Tartus Governorate, apparently in retaliation for an earlier rebel attack in the area that left at least half a dozen soldiers dead. State media stated that government forces were seeking only to clear the area of "terrorists". In all, the military claimed that it had killed 40 "terrorists" in Bayda and Baniyas.

- On 14 and 16 May 2013, two videos surfaced showing the execution of government soldiers by Islamic extremists in eastern Syria. In one, members of the group Islamic State of Iraq and Bilad al-Sham shot dead three prisoners in the middle of a square in Ar-Raqqah city, alleging that they were Syrian Army officers.[52][53] It was later revealed that two of the three prisoners killed were not Syrian Army officers but Alawite civilians: a dentist and his nephew, a teacher.[54] The other video showed the Al-Nusra Front executing 11 government soldiers in the eastern Deir al-Zor province. That video is believed to date back to some time in 2012.[55]

- The Hatla massacre occurred on 11 June 2013, when Syrian rebels killed 60 Shi'ite villagers in the village of Hatla, near Deir el-Zour. The killings were reportedly in retaliation for an attack a day earlier by Shi'ite pro-government fighters from the village, in which four rebels were killed. According to opposition activists, most of the dead were pro-government fighters, but civilians were killed as well, including women and children. Rebels also burned civilian houses during the takeover. Ten rebel fighters were killed during the attack. A total of 150 Shi'ite residents fled to the nearby government-held village of Jafra.

- On 18 June 2013, 20 people were killed in a Grad missile attack on the home of Parliament member Ahmad al-Mubarak, who is also the head of the Bani Izz clan, in the town of Abu Dala. Opposition activists claimed that he was killed by government forces. However, Ahmad al-Mubarak was a well-known government supporter, and one of his aides had been executed by rebels a week earlier.[56]

- On 22 and 23 July 2013, rebel forces attacked and captured the town of Khan al-Asal, west of Aleppo. During the takeover more than 150 soldiers were killed, including 51 soldiers and officers who were summarily executed after being captured. The incident was considered one of the worst mass executions by rebels in the war.[57][58] Several executions of soldiers in the village of Hara in the province of Deraa were also reported.[59]

- On 26 July 2013, 32 people, including 19 children and six women, were killed by an Army surface-to-surface missile fired at the Bab al-Nayrab neighbourhood of Aleppo city. The target was a jihadist base, but the missile fell short and hit civilian buildings.[60]

- On 21 August 2013, Syrian activists reported that government forces had struck Jobar, Zamalka, 'Ain Tirma, and Hazzah in the Eastern Ghouta region with chemical weapons. They claimed that at least 635 men, women and children were killed in the nerve gas attack. Unverified uploaded videos showed the victims, many of whom were convulsing, as well as several dozen bodies lined up.[61]


Victims of Sarin gas attack in Damascus


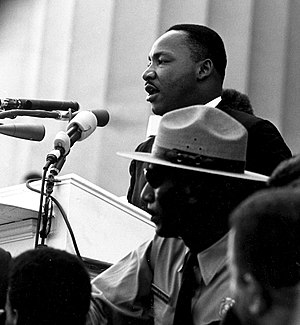

I Have a Dream Speech by Martin Luther King

Dr. Martin Luther King giving his "I Have a Dream" speech during the March on Washington in Washington, D.C., on 28 August 1963. (Photo credit: Wikipedia)

"I Have a Dream" is a public speech delivered by American activist Martin Luther King, Jr. on August 28, 1963, in which he called for an end to racism in the United States. Delivered to over 250,000 civil rights supporters from the steps of the Lincoln Memorial during the March on Washington for Jobs and Freedom, the speech was a defining moment of the American Civil Rights Movement.[1]

Beginning with a reference to the Emancipation Proclamation, which freed millions of slaves in 1863,[2] King observes that "one hundred years later, the Negro still is not free".[3] At the end of the speech, King departed from his prepared text for a partly improvised peroration on the theme of "I have a dream", possibly prompted by Mahalia Jackson's cry: "Tell them about the dream, Martin!"[4] In this part of the speech, which most excited the listeners and has now become the most famous, King described his dreams of freedom and equality arising from a land of slavery and hatred.[5] The speech was ranked the top American speech of the 20th century by a 1999 poll of scholars of public address.

The March on Washington for Jobs and Freedom was partly intended to demonstrate mass support for the civil rights legislation proposed by President Kennedy in June. King and other leaders therefore agreed to keep their speeches calm and to avoid provoking the civil disobedience that had become the hallmark of the civil rights movement. King originally designed his speech as a homage to Abraham Lincoln's Gettysburg Address, timed to coincide with the centennial of the Emancipation Proclamation.[5]

Speech title and the writing process

King had been preaching about dreams since 1960, when he gave a speech to the National Association for the Advancement of Colored People (NAACP) called "The Negro and the American Dream". This speech discusses the gap between the American dream and the American lived reality, saying that overt white supremacists have violated the dream, but also that "our federal government has also scarred the dream through its apathy and hypocrisy, its betrayal of the cause of justice". King suggests that "It may well be that the Negro is God’s instrument to save the soul of America."[7][8] He had also delivered a "dream" speech in Detroit, in June 1963, when he marched on Woodward Avenue with Walter Reuther and the Reverend C. L. Franklin, and had rehearsed other parts.[9]

The March on Washington Speech, known as the "I Have a Dream" speech, has been shown to have had several versions, written at different times.[10] It has no single draft; it is an amalgamation of several drafts and was originally called "Normalcy, Never Again." Little of this, or of another "Normalcy Speech," ended up in the final draft. A draft of "Normalcy, Never Again" is housed in the Morehouse College Martin Luther King, Jr. Collection of the Robert W. Woodruff Library of the Atlanta University Center and Morehouse College.[11] The focus on "I have a dream" emerged during the speech's delivery. Toward the end of the speech, noted African American gospel singer Mahalia Jackson shouted to Dr. King from the crowd, "Tell them about the dream, Martin."[12] Dr. King stopped delivering his prepared speech and started "preaching", punctuating his points with "I have a dream."

The speech was drafted with the assistance of Stanley Levison and Clarence Benjamin Jones[13] in Riverdale, New York City. Jones has said that "the logistical preparations for the march were so burdensome that the speech was not a priority for us" and that "on the evening of Tuesday, Aug. 27, [12 hours before the March] Martin still didn't know what he was going to say".[14]

Before the speech's rendition at the Great March on Washington, King had delivered its "I have a dream" refrains in a speech before 25,000 people in Detroit's Cobo Hall, immediately after the 125,000-strong Great Walk to Freedom in Detroit on June 23, 1963.[15][16] After the Washington, D.C. March, a recording of King's Cobo Hall speech was released by Detroit's Gordy Records as an LP entitled "The Great March To Freedom."[17]

The speech

Widely hailed as a masterpiece of rhetoric, King's speech invokes the Declaration of Independence, the Emancipation Proclamation, and the United States Constitution. Early in his speech, King alludes to Abraham Lincoln's Gettysburg Address by saying "Five score years ago..." King says in reference to the abolition of slavery articulated in the Emancipation Proclamation, "It came as a joyous daybreak to end the long night of their captivity." Anaphora, the repetition of a phrase at the beginning of sentences, is a rhetorical tool employed throughout the speech. An example of anaphora is found early as King urges his audience to seize the moment: "Now is the time..." is repeated four times in the sixth paragraph. The most widely cited example of anaphora is found in the often quoted phrase "I have a dream..." which is repeated eight times as King paints a picture of an integrated and unified America for his audience. Other occasions when King used anaphora include "One hundred years later," "We can never be satisfied," "With this faith," "Let freedom ring," and "free at last." King was the sixteenth out of eighteen people to speak that day, according to the official program.[18]

According to U.S. Representative John Lewis, who also spoke that day as the president of the Student Nonviolent Coordinating Committee, "Dr. King had the power, the ability, and the capacity to transform those steps on the Lincoln Memorial into a monumental area that will forever be recognized. By speaking the way he did, he educated, he inspired, he informed not just the people there, but people throughout America and unborn generations."[19]

The ideas in the speech reflect King's social experiences of the mistreatment of blacks. The speech draws upon appeals to America's myths as a nation founded to provide freedom and justice to all people, and then reinforces and transcends those secular mythologies by placing them within a spiritual context by arguing that racial justice is also in accord with God's will. Thus, the rhetoric of the speech provides redemption to America for its racial sins.[20] King describes the promises made by America as a "promissory note" on which America has defaulted. He says that "America has given the Negro people a bad check", but that "we've come to cash this check" by marching in Washington, D.C.

Similarities and allusions

Further information: Martin Luther King, Jr. authorship issues

King's speech uses words and ideas from his own speeches and other texts. He had spoken about dreams, quoted from "My Country 'Tis of Thee", and of course referred extensively to the Bible, for years. The idea of constitutional rights as an "unfulfilled promise" was suggested by Clarence Jones.[7]

The closing passage from King's speech partially resembles Archibald Carey, Jr.'s address to the 1952 Republican National Convention: both speeches end with a recitation of the first verse of Samuel Francis Smith's popular patriotic hymn "America" (My Country ’Tis of Thee), and the speeches share the name of one of several mountains from which both exhort "let freedom ring".[7]

King also is said to have built on Prathia Hall's speech at the site of a burned-down church in Terrell County, Georgia in September 1962, in which she used the repeated phrase "I have a dream".[21]

The speech also alludes to Psalm 30:5[22] in its second stanza.

King also quotes from Isaiah 40:4-5—"I have a dream that every valley shall be exalted..."[23] Additionally, King alludes to the opening lines of Shakespeare's "Richard III" ("Now is the winter of our discontent/Made glorious summer...") when he remarks, "this sweltering summer of the Negro's legitimate discontent will not pass until there is an invigorating autumn..."

Responses

The speech was lauded in the days after the event and was widely considered the high point of the March by contemporary observers.[24] James Reston, writing for the New York Times, said that “Dr. King touched all the themes of the day, only better than anybody else. He was full of the symbolism of Lincoln and Gandhi, and the cadences of the Bible. He was both militant and sad, and he sent the crowd away feeling that the long journey had been worthwhile.”[7] Reston also noted that the event "was better covered by television and the press than any event here since President Kennedy's inauguration," and opined that "it will be a long time before [Washington] forgets the melodious and melancholy voice of the Rev. Dr. Martin Luther King Jr. crying out his dreams to the multitude."[25] An article in the Boston Globe by Mary McGrory reported that King's speech "caught the mood" and "moved the crowd" of the day "as no other" speaker in the event.[26] Marquis Childs of The Washington Post wrote that King's speech "rose above mere oratory".[27] An article in the Los Angeles Times commented that the "matchless eloquence" displayed by King, "a supreme orator" of "a type so rare as almost to be forgotten in our age," put to shame the advocates of segregation by inspiring the "conscience of America" with the justice of the civil-rights cause.[28]

The Federal Bureau of Investigation (FBI) also took note of the speech, which prompted it to expand its COINTELPRO operation against the SCLC and to target King specifically as a major enemy of the United States.[29] Two days after King delivered "I Have a Dream", Agent William C. Sullivan, the head of COINTELPRO, wrote a memo about King's growing influence:

In the light of King's powerful demagogic speech yesterday he stands head and shoulders above all other Negro leaders put together when it comes to influencing great masses of Negroes. We must mark him now, if we have not done so before, as the most dangerous Negro of the future in this Nation from the standpoint of communism, the Negro and national security.[30]

The speech was a success for the Kennedy administration and for the liberal civil rights coalition that had planned it. It was considered a "triumph of managed protest", and not one arrest relating to the demonstration occurred. Kennedy had watched King's speech on TV and been very impressed. Afterwards, March leaders accepted an invitation to the White House to meet with President Kennedy. Kennedy felt the March bolstered the chances for his civil rights bill.[31] Some of the more radical Black leaders who were present condemned the speech (along with the rest of the march)[citation needed] as too compromising. Malcolm X later wrote in his Autobiography: "Who ever heard of angry revolutionaries swinging their bare feet together with their oppressor in lily pad pools, with gospels and guitars and 'I have a dream' speeches?"[5]

Legacy

The March on Washington put pressure on the Kennedy administration to advance its civil rights legislation in Congress.[32] The diaries of Arthur M. Schlesinger, Jr., published posthumously in 2007, suggest that President Kennedy was concerned that if the march failed to attract large numbers of demonstrators, it might undermine his civil rights efforts.

In the wake of the speech and march, King was named Man of the Year by TIME magazine for 1963, and in 1964 he became the youngest person awarded the Nobel Peace Prize up to that time.[33] The speech did not appear in writing until August 1983, when a transcript was published in the Washington Post.[3]

In 2002, the Library of Congress honored the speech by adding it to the United States National Recording Registry.[34]

In 2003, the National Park Service dedicated an inscribed marble pedestal to commemorate the location of King's speech at the Lincoln Memorial.[35]

Copyright dispute

Because King's speech was broadcast to a large radio and television audience, there was controversy about its copyright status. If the performance of the speech constituted "general publication", it would have entered the public domain due to King's failure to register the speech with the Register of Copyrights. If the performance constituted only "limited publication", however, King retained common law copyright. This led to a lawsuit, Estate of Martin Luther King, Jr., Inc. v. CBS, Inc., which established that the King estate does hold copyright over the speech and had standing to sue; the parties then settled. Unlicensed use of the speech or a part of it can still be lawful in some circumstances, especially in jurisdictions with doctrines such as fair use or fair dealing. Under the applicable copyright laws, the speech will remain under copyright in the United States until 70 years after King's death, that is, until 2038.

Original copy of the speech


US Navy Battle Ships

The names of commissioned ships of the United States Navy all start with USS, meaning 'United States Ship'. Non-commissioned, civilian-manned vessels of the U.S. Navy have names that begin with USNS, standing for 'United States Naval Ship'. A letter-based hull classification symbol designates a vessel's type. Ship names are selected by the Secretary of the Navy and are taken from states, cities, towns, important persons, important locations, famous battles, fish, and ideals. Different types of ships are usually named after different kinds of sources.

Modern aircraft carriers and submarines use nuclear reactors for power. See United States Naval reactor for information on classification schemes and the history of nuclear-powered vessels.

Modern cruisers, destroyers and frigates are called surface combatants and act mainly as escorts for aircraft carriers, amphibious assault ships, auxiliaries and civilian craft, but the largest of them have gained a land-attack role through the use of cruise missiles and a population defense role through missile defense.


Fukushima nuclear disaster radiation effects


The radiation effects from the Fukushima Daiichi nuclear disaster are the observed and predicted effects resulting from the release of radioactive isotopes from the Fukushima Daiichi Nuclear Power Plant after the 2011 Tōhoku earthquake and tsunami. Radioactive isotopes were released from reactor containment vessels as a result of venting to reduce gaseous pressure, and the discharge of coolant water into the sea[citation needed]. This resulted in Japanese authorities implementing a 20 km exclusion zone around the power plant, and the continued displacement of approximately 156,000 people as of early 2013.[3] Trace quantities of radioactive particles from the incident, including iodine-131 and caesium-134/137, have since been detected around the world.[4][5][6] As of early 2013, no physical health effects due to radiation had been observed among the public or Fukushima Daiichi Nuclear Power Plant workers.[7][8]

In early 2013, the World Health Organization (WHO) released a comprehensive health risk assessment report which concluded that, for the general population inside and outside of Japan, the predicted health risks are small and no observable increases in cancer rates above background rates are expected.[9] The report estimates an increase in risk for specific cancers for certain subsets of the population inside the Fukushima Prefecture. For people in the most contaminated locations within the prefecture, this includes a 4% increase in solid cancers in females exposed as infants, a 6% increase in breast cancer in females exposed as infants, and a 7% increase in leukaemia in males exposed as infants. For females exposed as infants, the estimated lifetime risk of thyroid cancer rises from 0.75% to 1.25%.
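The thyroid-cancer figures above can be read in absolute or relative terms. The following is a simple worked check using only the two lifetime-risk values quoted from the WHO report. It shows that an increase of half a percentage point corresponds to a relative increase of roughly two-thirds.

```latex
\[
\Delta_{\text{abs}} = 1.25\% - 0.75\% = 0.50 \text{ percentage points}
\]
\[
\Delta_{\text{rel}} = \frac{1.25\% - 0.75\%}{0.75\%} = \frac{0.50}{0.75} \approx 0.67 \quad (\approx 67\%)
\]
```

A small absolute change on a low baseline risk can therefore appear as a large percentage increase.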

Preliminary dose-estimation reports by WHO and the United Nations Scientific Committee on the Effects of Atomic Radiation (UNSCEAR) indicate that future health effects due to the accident may not be statistically detectable. However, 167 plant workers received radiation doses that slightly elevate their risk of developing cancer.[10][11][12] Estimated effective doses from the accident outside of Japan are considered to be below (or far below) the dose levels regarded as very small by the international radiological protection community.[11] The United Nations Scientific Committee on the Effects of Atomic Radiation is expected to release a final report on the effects of radiation exposure from the accident by the end of 2013.[12]

A June 2012 Stanford University study used a linear no-threshold model to estimate that the radiation release from the Fukushima Daiichi nuclear plant could cause 130 cancer deaths globally (with a lower bound of 15 and an upper bound of 1,100) and 180 cancer cases in total (with a lower bound of 24 and an upper bound of 1,800), most of them expected to occur in Japan. Radiation exposure to workers at the plant was projected to result in 2 to 12 deaths.[13] However, a December 2012 UNSCEAR statement to the Fukushima Ministerial Conference on Nuclear Safety advised that "[b]ecause of the great uncertainties in risk estimates at very low doses, UNSCEAR does not recommend multiplying very low doses by large numbers of individuals to estimate numbers of radiation-induced health effects within a population exposed to incremental doses at levels equivalent to or lower than natural background levels."

Health effects

Preliminary dose-estimation reports by the World Health Organization and the United Nations Scientific Committee on the Effects of Atomic Radiation indicate that 167 plant workers received radiation doses that slightly elevate their risk of developing cancer, although the increase may not be statistically detectable.[10][11][12] Estimated effective doses from the accident outside of Japan are considered to be below (or far below) the dose levels regarded as very small by the international radiological protection community.[11]

According to the Japanese Government, 180,592 people in the general population were screened for radiation exposure in March 2011, and no cases affecting health were found.[14] Thirty workers conducting operations at the plant had exposure levels greater than 100 mSv.[15] The health effects of the radiation release are believed to be primarily psychological rather than physical. Even in the most severely affected areas, radiation doses never exceeded a quarter of the dose linked to an increase in cancer risk: 25 mSv, compared with the 100 mSv linked to increased cancer rates among victims at Hiroshima and Nagasaki. However, people who have been evacuated have suffered from depression and other mental health effects.[16]

The negative health effects of the Fukushima nuclear disaster include thyroid abnormalities, infertility and an increased risk of cancer.[17][18][19] One study by a research team in Fukushima found that more than a third (36%) of children in Fukushima have abnormal growths in their thyroid glands. Furthermore, a WHO report found a significant increase in the risk of developing cancer for people who live near Fukushima.[17] This includes a 70% higher risk of thyroid cancer for female infants, a 7% higher risk of leukemia in males exposed as infants, a 6% higher risk of breast cancer in females exposed as infants and a 4% higher risk of solid cancers in females exposed as infants.[17] An increase in infertility has also been reported.[19]
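
The WHO figures earlier in this article put the lifetime thyroid cancer risk for females exposed as infants at 0.75% without the accident and 1.25% with it. The short sketch below uses only those two numbers to show how a relative increase of roughly 70% corresponds to an absolute increase of half a percentage point; the function names are illustrative, not taken from the WHO report.

```python
def absolute_increase(baseline_pct: float, exposed_pct: float) -> float:
    """Difference in lifetime risk, in percentage points."""
    return exposed_pct - baseline_pct


def relative_increase(baseline_pct: float, exposed_pct: float) -> float:
    """Increase expressed as a fraction of the baseline risk."""
    return (exposed_pct - baseline_pct) / baseline_pct


# WHO lifetime thyroid-cancer risk for females exposed as infants, in percent.
BASELINE_PCT = 0.75
EXPOSED_PCT = 1.25

abs_pp = absolute_increase(BASELINE_PCT, EXPOSED_PCT)
rel = relative_increase(BASELINE_PCT, EXPOSED_PCT)
print(f"absolute increase: {abs_pp:.2f} percentage points")  # 0.50
print(f"relative increase: {rel:.0%}")  # 67%
```

Both numbers describe the same change: headline figures such as "70% higher risk" are relative, which is why they sound much larger than the half-point change in absolute lifetime risk.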

As of August 2013, there have been more than 40 children newly diagnosed with thyroid cancer and other cancers in Fukushima prefecture alone and nuclear experts warn that this pattern may also occur in other areas of Japan.[20]

As of September 2011, six workers at the Fukushima Daiichi site have exceeded lifetime legal limits for radiation and more than 300 have received significant radiation doses.[21] Still, there were no deaths or serious injuries due to direct radiation exposures.

According to a June 2012 Stanford University study[unreliable source], the radiation released could cause 130 deaths from cancer (lower bound 15, upper bound 1,100) and 180 cancer cases (lower bound 24, upper bound 1,800), mostly in Japan[unreliable source]. Radiation exposure to workers at the plant was projected to result in 2 to 12 deaths. The radiation released was an order of magnitude lower than that released from Chernobyl, and some 80% of the radioactivity from Fukushima was deposited over the Pacific Ocean; preventive actions taken by the Japanese government may have substantially reduced the health impact of the radiation release. Approximately 600 additional deaths have been reported from non-radiological causes such as mandatory evacuations. The evacuation may have prevented between 3 and 245 deaths from radiation, with a best estimate of 28; even the upper bound of lives saved by the evacuation is lower than the number of deaths already caused by the evacuation itself.[13]

However, that estimate has been challenged, with some scientists arguing that accidents and pollution from coal or gas plants would have caused more lost years of life.[22]

The radiation emitted in Fukushima prompted the evacuation of "16,000 people", which, according to a study, has also caused mental illness and other psychological effects among them. Stress, fatigue and even being around other seriously ill people were among the main contributors to the mental health problems of many of these individuals during the evacuation. The study also notes that children are more susceptible to radiation "because their cells are dividing more rapidly and radiation-damaged RNA may be carried in more generations of cells." It adds that DNA damage is common among people with prolonged exposure to radiation through "air, ground and food."[23]

According to the Wall Street Journal, some areas were exposed to about 2 rem of radiation and some other areas as much as 22 rems.[24]
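
The Wall Street Journal figures are given in rem, while the rest of this article uses millisieverts. Since 1 rem equals 0.01 sievert, the conversion is a fixed factor of 10 mSv per rem. The sketch below only converts units; the article does not say over what period these doses were accumulated, so the results should not be compared directly with the other dose figures here.

```python
# 1 rem = 0.01 sievert = 10 millisieverts (exact, by definition).
MSV_PER_REM = 10.0


def rem_to_msv(dose_rem: float) -> float:
    """Convert a dose in rem to millisieverts."""
    return dose_rem * MSV_PER_REM


for dose_rem in (2, 22):  # figures attributed to the Wall Street Journal
    print(f"{dose_rem} rem = {rem_to_msv(dose_rem):.0f} mSv")
```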

A report by the World Health Organization published in February 2013 anticipated no noticeable increases in cancer rates for the overall population, but somewhat elevated rates for particular sub-groups. For example, infants in Namie Town and Iitate Village were estimated to have a 6% increase in female breast cancer risk and a 7% increase in male leukaemia risk. A third of the emergency workers involved in the accident would have increased cancer risks.[25]

Total emissions

On 24 May 2012, more than a year after the disaster, TEPCO released its estimate of radiation releases due to the Fukushima Daiichi nuclear disaster. An estimated 538,100 terabecquerels (TBq) of iodine-131, caesium-134 and caesium-137 was released: 520,000 TBq into the atmosphere between 12 and 31 March 2011 and 18,100 TBq into the ocean from 26 March to 30 September 2011. In total, 511,000 TBq of iodine-131, 13,500 TBq of caesium-134 and 13,600 TBq of caesium-137 were released into the atmosphere and the ocean.[26] In May 2012, TEPCO reported that at least 900 PBq had been released "into the atmosphere in March last year [2011] alone",[27][28] up from previous estimates of 360–370 PBq in total.
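
TEPCO's May 2012 estimate is broken down two ways, by release pathway and by nuclide, and both should add up to the same total. The check below sums only the numbers quoted in this paragraph and converts the result to petabecquerels (1 PBq = 1,000 TBq) so it can be read alongside the PBq figures elsewhere in the article. The article does not say whether the 900 PBq and 360–370 PBq figures use the same simple-sum basis, so this sketch does not compare them directly.

```python
# TEPCO's 24 May 2012 estimate, in terabecquerels (TBq), as quoted above.
by_pathway_tbq = {"atmosphere": 520_000, "ocean": 18_100}
by_nuclide_tbq = {"I-131": 511_000, "Cs-134": 13_500, "Cs-137": 13_600}

TBQ_PER_PBQ = 1_000  # 1 PBq = 10**15 Bq = 1,000 TBq

total_by_pathway = sum(by_pathway_tbq.values())
total_by_nuclide = sum(by_nuclide_tbq.values())

# Both breakdowns should add up to the same headline total.
assert total_by_pathway == total_by_nuclide == 538_100

print(f"total: {total_by_pathway:,} TBq = {total_by_pathway / TBQ_PER_PBQ} PBq")
```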

The primary releases of radioactive nuclides have been iodine and caesium;[29][30] strontium[31] and plutonium[32][33] have also been found. These elements have been released into the air via steam[34] and into water leaking into the groundwater[35] or the ocean.[36] The expert who prepared a frequently cited Austrian Meteorological Service report asserted that the "Chernobyl accident emitted much more radioactivity and a wider diversity of radioactive elements than Fukushima Daiichi has so far, but it was iodine and caesium that caused most of the health risk – especially outside the immediate area of the Chernobyl plant."[29] Iodine-131 has a half-life of 8 days while caesium-137 has a half-life of over 30 years. The IAEA has developed a method that weighs the "radiological equivalence" for different elements.[37] TEPCO has published estimates using a simple-sum methodology.[38]
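
The difference in half-lives explains why iodine-131 matters mainly in the first weeks while caesium-137 persists for decades. The sketch below applies simple exponential decay, N/N₀ = 2^(−t/T½), to both nuclides over roughly three months (mid-March to 14 June 2011) and over one year. It uses 8.02 days for iodine-131 and about 30.17 years for caesium-137, standard nuclear-data values consistent with the article's "8 days" and "over 30 years"; it ignores weathering, washout and any other removal process.

```python
I131_HALF_LIFE_DAYS = 8.02                # article: "8 days"
CS137_HALF_LIFE_DAYS = 30.17 * 365.25     # article: "over 30 years"


def fraction_remaining(elapsed_days: float, half_life_days: float) -> float:
    """Fraction of the initial activity left after pure radioactive decay."""
    return 0.5 ** (elapsed_days / half_life_days)


# About mid-March to 14 June 2011, and one full year.
for label, days in (("~91 days", 91), ("1 year", 365.25)):
    i131 = fraction_remaining(days, I131_HALF_LIFE_DAYS)
    cs137 = fraction_remaining(days, CS137_HALF_LIFE_DAYS)
    print(f"{label}: I-131 {i131:.1e}, Cs-137 {cs137:.3f}")
```

After about three months less than 0.1% of the iodine-131 remains while more than 99% of the caesium-137 is still present, which is consistent with iodine-131 dropping below detection at most sampling sites by mid-2011.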

According to a June 2011 report of the International Atomic Energy Agency (IAEA), at that time no confirmed long-term health effects to any person had been reported as a result of radiation exposure from the nuclear accident.[39]

A leaked TEPCO report dated June 2011 revealed that plutonium-238, -239, -240 and -241 were released "to the air" from the site during the first 100 hours after the earthquake, with the total amount of plutonium said to be 120 billion becquerels (120 GBq), perhaps as much as 50 grams. The same paper mentioned a release of 7.6 trillion becquerels of neptunium-239, about 1 milligram. Neptunium-239 decays into plutonium-239. TEPCO prepared this report for a press conference on 6 June, but according to Mochizuki of the Fukushima Diary website, the media knew and "kept concealing the risk for 7 months and kept people exposed".[40][unreliable source?]
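
The gram and milligram figures follow from each isotope's specific activity: since activity A = λN with λ = ln 2 / T½, a pure sample of activity A has mass m = A · T½ · M / (ln 2 · N_A). The sketch below uses approximate half-lives and molar masses from standard nuclear data tables (not from the leaked report) and assumes, for illustration, that the whole 120 GBq is a single isotope. It suggests that "as much as 50 grams" is an upper bound that holds only if the release were entirely plutonium-239.

```python
import math

AVOGADRO = 6.02214076e23          # atoms per mole
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY

# Approximate (half-life in seconds, molar mass in g/mol) from standard data tables.
NUCLIDES = {
    "Pu-239": (24_110 * SECONDS_PER_YEAR, 239.05),
    "Pu-241": (14.29 * SECONDS_PER_YEAR, 241.06),
    "Np-239": (2.356 * SECONDS_PER_DAY, 239.05),
}


def mass_grams(activity_bq: float, nuclide: str) -> float:
    """Mass of a pure sample of `nuclide` with the given activity."""
    half_life_s, molar_mass = NUCLIDES[nuclide]
    decay_constant = math.log(2) / half_life_s   # per second
    atoms = activity_bq / decay_constant         # from A = lambda * N
    return atoms / AVOGADRO * molar_mass


print(f"120 GBq as Pu-239: {mass_grams(120e9, 'Pu-239'):.0f} g")
print(f"120 GBq as Pu-241: {mass_grams(120e9, 'Pu-241'):.3f} g")
print(f"7.6 TBq of Np-239: {mass_grams(7.6e12, 'Np-239') * 1000:.2f} mg")
```

Because shorter-lived isotopes are far more active per gram, the same 120 GBq of plutonium-241 would weigh only a few hundredths of a gram, so the actual plutonium mass depends on the isotopic mix, which the report as quoted does not give.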

According to one expert, the release of radioactivity is about one-tenth that of the Chernobyl disaster, and the contaminated area is also about one-tenth that of Chernobyl.[41]

Air releases

A 12 April report prepared by NISA estimated the total air release of iodine-131 and caesium-137 at between 370 PBq and 630 PBq, combining iodine and caesium with IAEA methodology.[42] On 23 April, the NSC updated its release estimates but did not re-estimate the total release; instead, it indicated that 154 TBq were being released into the air daily as of 5 April.[43][44]
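
Combining iodine and caesium "with IAEA methodology" means converting each nuclide to an iodine-131 equivalent before adding, rather than summing raw activities. The sketch below assumes the radiological-equivalence factors from the IAEA INES User's Manual for atmospheric releases, in which caesium-137 counts 40 times as much as iodine-131, and applies them to the 12 April NISA and NSC estimates quoted in this article (130 PBq and 150 PBq of iodine-131; 6.1 PBq and 12 PBq of caesium-137).

```python
# Assumed INES radiological-equivalence factors (I-131 equivalent) for air releases.
IODINE_EQUIVALENCE = {"I-131": 1.0, "Cs-137": 40.0}


def simple_sum_pbq(releases_pbq: dict[str, float]) -> float:
    """Add activities as-is, without weighting by radiological impact."""
    return sum(releases_pbq.values())


def iodine_equivalent_pbq(releases_pbq: dict[str, float]) -> float:
    """Weight each nuclide's activity by its I-131 equivalence factor, then add."""
    return sum(IODINE_EQUIVALENCE[n] * a for n, a in releases_pbq.items())


# 12 April 2011 estimates quoted in this article (PBq).
estimates = {
    "NISA": {"I-131": 130.0, "Cs-137": 6.1},
    "NSC": {"I-131": 150.0, "Cs-137": 12.0},
}

for agency, releases in estimates.items():
    print(f"{agency}: simple sum {simple_sum_pbq(releases):.1f} PBq, "
          f"I-131 equivalent {iodine_equivalent_pbq(releases):.0f} PBq")
```

With these factors the NISA and NSC figures come out at about 374 PBq and 630 PBq, matching the 370–630 PBq range above, while a simple sum of the same numbers stays below 170 PBq. Totals produced by the two methods therefore cannot be compared directly.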

On 24 August 2011, the Nuclear Safety Commission (NSC) of Japan published the results of the recalculation of the total amount of radioactive materials released into the air during the incident at the Fukushima Daiichi Nuclear Power Station. The total amounts released between 11 March and 5 April were revised downwards to 130 PBq for iodine-131 (I-131) and 11 PBq for caesium-137 (Cs-137). Earlier estimations were 150 PBq and 12 PBq.[45]

On 20 September, the Japanese government and TEPCO announced the installation of new filters at reactors 1, 2 and 3 to reduce the release of radioactive materials into the air. Gases from the reactors would be decontaminated before being released. In the first half of September 2011, the amount of radioactive substances released from the plant was about 200 million becquerels per hour, according to TEPCO, approximately one four-millionth of the level in the initial stages of the accident in March.[46]

According to TEPCO, emissions immediately after the accident were around 220 billion becquerels; readings declined after that, and in November and December 2011 they dropped to 17 thousand becquerels, about one 13-millionth of the initial level. In January 2012, however, human activity at the plant caused emissions to rise again to 19 thousand becquerels. Radioactive materials around reactor 2, where the surroundings were still highly contaminated, were stirred up by workers going in and out of the building when they inserted an optical endoscope into the containment vessel as a first step toward decommissioning the reactor.[47][48]

Iodine-131

A widely cited Austrian Meteorological Service report estimated the total amount of I-131 released into the air as of 19 March, extrapolating from several days of ideal observation at several worldwide CTBTO radionuclide measuring facilities (Freiburg, Germany; Stockholm, Sweden; Takasaki, Japan; and Sacramento, USA) during the first 10 days of the accident.[29][49] The report's estimates of total I-131 emissions based on these stations ranged from 10 PBq to 700 PBq,[49] or 1% to 40% of the 1,760 PBq[49][50] of I-131 estimated to have been released at Chernobyl.[29]

Reports issued on 12 April 2011 by NISA and the NSC estimated the total air release of iodine-131 at 130 PBq and 150 PBq respectively, or about 30 grams.[42] However, on 23 April, the NSC revised its original estimates of iodine-131 released.[43] The NSC did not estimate the total release based on these updated numbers, but estimated a release of 0.14 TBq per hour on 5 April.[43][44]

On 22 September, the results of a survey conducted by the Japanese Science Ministry were published. The survey showed that radioactive iodine had spread northwest and south of the plant. Soil samples were taken at 2,200 locations, mostly in Fukushima Prefecture, in June and July, and were used to create a map of radioactive contamination as of 14 June. Because iodine-131 has a short half-life of 8 days, only 400 locations still tested positive. The map showed that iodine-131 had spread northwest of the plant, as caesium-137 had according to an earlier map. However, I-131 was also found south of the plant at relatively high levels, even higher than those of caesium-137 in coastal areas south of the plant. According to the ministry, clouds moving southwards apparently picked up large amounts of iodine-131 emitted at the time. The survey was conducted to determine the risk of thyroid cancer within the population.[51]

Tellurium-129m

On 31 October, the Japanese Ministry of Education, Culture, Sports, Science and Technology released a map showing contamination by radioactive tellurium-129m within a 100-kilometer radius of the Fukushima No. 1 nuclear plant. The map showed the concentrations of tellurium-129m, a byproduct of uranium fission, in the soil as of 14 June 2011. High concentrations were found northwest of the plant and also 28 kilometers south near the coast, in the cities of Iwaki, Fukushima Prefecture, and Kitaibaraki, Ibaraki Prefecture. Iodine-131 was also found in the same areas, and the tellurium was most likely deposited at the same time as the iodine. The highest concentration found was 2.66 million becquerels per square meter, two kilometers from the plant in the empty town of Okuma. Tellurium-129m has a half-life of about 34 days, so present levels are a very small fraction of the initial contamination. Tellurium has no biological function, so even when drinks or food were contaminated with it, it would not accumulate in the body the way iodine accumulates in the thyroid gland.[52]

Caesium-137

On 24 March, the Austrian Meteorological Service report estimated the total amount of caesium-137 released into the air as of 19 March, extrapolating from several days of ideal observation at a handful of worldwide CTBTO radionuclide measuring facilities. The agency estimated an average release of 5,000 TBq per day.[29][49] Over the course of the disaster, Chernobyl released a total of 85,000 TBq of caesium-137.[29] However, the 12 April reports by NISA and the NSC estimated total caesium-137 releases at 6,100 TBq and 12,000 TBq respectively, about 2–4 kg.[42] On 23 April, the NSC updated this figure to a release of 0.14 TBq per hour of caesium-137 on 5 April, but did not recalculate the total release estimate.[43][44]
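
The mass figures quoted for the 12 April estimates (about 30 grams of iodine-131 and about 2–4 kg of caesium-137) follow from each nuclide's specific activity, the activity of one gram of the pure nuclide, λ · N_A / M. The sketch below assumes approximate half-lives (8.02 days and 30.17 years) and molar masses from standard nuclear data tables, none of which come from the NISA or NSC reports, and divides each reported activity by the corresponding specific activity.

```python
import math

AVOGADRO = 6.02214076e23          # atoms per mole
SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY


def specific_activity_bq_per_g(half_life_s: float, molar_mass_g: float) -> float:
    """Activity of one gram of a pure nuclide: (ln 2 / half-life) * N_A / M."""
    return math.log(2) / half_life_s * AVOGADRO / molar_mass_g


# Approximate nuclear data (assumed, not taken from this article).
SA_I131 = specific_activity_bq_per_g(8.02 * SECONDS_PER_DAY, 130.91)
SA_CS137 = specific_activity_bq_per_g(30.17 * SECONDS_PER_YEAR, 136.91)

# (agency, iodine-131 in PBq, caesium-137 in PBq) from the 12 April estimates.
for agency, i131_pbq, cs137_pbq in (("NISA", 130, 6.1), ("NSC", 150, 12)):
    i131_g = i131_pbq * 1e15 / SA_I131
    cs137_kg = cs137_pbq * 1e15 / SA_CS137 / 1000
    print(f"{agency}: I-131 about {i131_g:.0f} g, Cs-137 about {cs137_kg:.1f} kg")
```

Iodine-131 decays roughly 1,400 times faster than caesium-137, so a much larger activity corresponds to a much smaller mass, which is why roughly 130–150 PBq of iodine weighs only tens of grams while about 6–12 PBq of caesium weighs kilograms.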

Strontium-90